From data to action: AI system enhances mortality surveillance at HealthServ Los Baños


Trained on over 26,000 admission records, the tool helps identify unusual mortality trends, guide timely interventions, and strengthen quality governance.

The vast amounts of clinical data generated by hospitals daily offer opportunities to uncover trends and guide improvement initiatives. But sorting through the data to extract key insights can be challenging.

Recognising this issue, HealthServ Los Baños Medical Center in the Philippines developed a data-driven, AI-assisted system to monitor and predict mortality rates. The system would help hospital leadership better identify unusual mortality patterns and prioritise potentially preventable deaths for review.

“The growth in clinical data presented a timely opportunity to use clinical endpoints like mortality to strengthen performance monitoring and quality improvement. But it also raised a caution: without robust methods for interpreting these data, there’s a real risk of drawing inaccurate – or even harmful – conclusions from flawed assumptions,” said the hospital.

Mortality rate was selected as the outcome metric of focus because it was a more reliably coded and objective outcome than morbidity. Existing quality initiatives also already addressed morbidity-related concerns, such as infection control and near-miss medication events. At the same time, manual mortality chart reviews were labour-intensive and vulnerable to clinician bias and inconsistency.

Developing an AI-assisted mortality surveillance system

A multidisciplinary team led by a clinician-researcher, in close collaboration with the medical records division and quality management office – with oversight from the medical affairs department and the medical director – designed the system with three main functions in mind:

- First, it needed to offer risk-adjusted mortality estimates (i.e., predicted risk of death) to account for varying patient characteristics across time and departments.

- Second, it needed to provide timely alerts of significant deviations from historical baselines.

- Third, it needed to generate interpretable outputs that could inform review processes without overwhelming staff.

“The short-term goal was to increase the efficiency of chart screening by flagging unexpected deaths using an objective, reproducible risk framework. The intermediate aim was to enable learning from avoidable deaths,” said the hospital. “Ultimately, the system sought to improve patient outcomes through informed quality improvement initiatives guided by audit findings.”

Inside the data and modelling process

Data from over 26,000 hospital admissions from 2016 to 2024 (excluding the pandemic period of 2020 to 2023 due to its atypical mortality patterns) were used to develop the mortality prediction model. The data included both structured entries (such as demographics and discharge outcomes) and unstructured entries, particularly free-text diagnosis fields. As these diagnoses were authored by a broad range of clinicians and provided a detailed and nuanced account of each patient’s clinical course, they were especially valuable for AI modelling, the hospital noted.

Subsequently, the data were processed through a sequential AI pipeline. First, free text was processed using natural language processing, accounting for local clinical terminology and informal coding practices. Next, a random forest model identified variables most predictive of mortality. These were then entered into a logistic regression model to estimate predicted mortality risk scores of individual cases.
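The first and last stages of that pipeline can be illustrated with a minimal sketch. It is written in Python using only the standard library, whereas the hospital's system was built in R, and it is not the hospital's implementation. The shorthand mapping, the coefficients, and the function names are all hypothetical. The random forest variable-selection step is omitted, so the set of terms is simply assumed.

```python
import math
import re

# Hypothetical mapping from informal or local shorthand to canonical terms.
SYNONYMS = {"cap": "pneumonia", "pna": "pneumonia", "mi": "myocardial_infarction"}

# Hypothetical log-odds coefficients; a real model would estimate these from data
# after a variable-selection step (a random forest in the hospital's pipeline).
INTERCEPT = -4.0
AGE_COEF_PER_DECADE = 0.3
TERM_COEFS = {"sepsis": 1.8, "pneumonia": 0.9, "myocardial_infarction": 1.2}


def tokenize(diagnosis_text):
    """Lowercase the free text, split it into word tokens, and map shorthand to canonical terms."""
    tokens = re.findall(r"[a-z]+", diagnosis_text.lower())
    return {SYNONYMS.get(token, token) for token in tokens}


def predicted_risk(diagnosis_text, age_years):
    """Logistic regression score: convert summed log-odds into a probability of death."""
    terms = tokenize(diagnosis_text)
    log_odds = INTERCEPT + AGE_COEF_PER_DECADE * (age_years / 10)
    log_odds += sum(coef for term, coef in TERM_COEFS.items() if term in terms)
    return 1 / (1 + math.exp(-log_odds))


if __name__ == "__main__":
    for text in ("Sepsis sec. to CAP", "CAP", "Closed fracture, left radius"):
        print(f"{text!r}: {predicted_risk(text, age_years=70):.2f}")
```

Because the final stage is a logistic regression, each coefficient can be read directly as a change in log-odds, which is one reason such a model remains easy for reviewers to interpret.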

The hospital also used two types of statistical process control chart to monitor mortality. Cumulative sum (CUSUM) charts were used to track hospital-wide all-cause mortality over time. Expressed in terms of excess deaths, they served as an early warning signal for systemic issues.

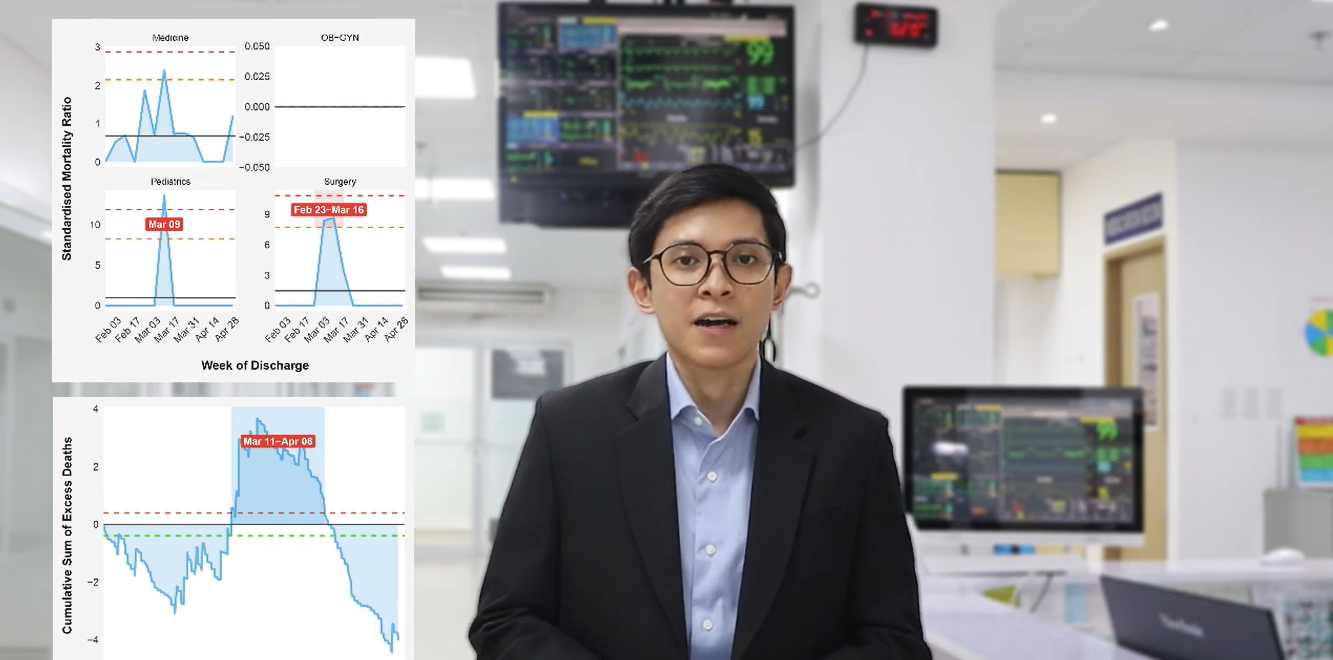
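One common way to express a CUSUM in terms of excess deaths is to keep a running total of observed minus expected deaths, where each admission's expected contribution is its predicted risk. The sketch below assumes that formulation; it is written in Python for illustration (the hospital's system uses R), and the function names and the alert threshold are hypothetical rather than the hospital's settings.

```python
def cusum_excess_deaths(outcomes, predicted_risks):
    """Running total of observed minus expected deaths, one value per admission.

    outcomes: 1 if the patient died, 0 if discharged alive, in admission order.
    predicted_risks: the model's predicted probability of death for each admission.
    """
    if len(outcomes) != len(predicted_risks):
        raise ValueError("outcomes and predicted_risks must have the same length")
    running = 0.0
    path = []
    for died, risk in zip(outcomes, predicted_risks):
        running += died - risk  # a death adds (1 - risk); a survival subtracts risk
        path.append(running)
    return path


def first_signal(path, threshold):
    """Index of the first point where cumulative excess deaths reach the threshold, else None."""
    for index, value in enumerate(path):
        if value >= threshold:
            return index
    return None


if __name__ == "__main__":
    outcomes = [0, 0, 1, 0, 1, 1, 0, 1]
    risks = [0.25] * 8  # toy data: every admission has the same predicted risk
    path = cusum_excess_deaths(outcomes, risks)
    print(path)                     # [-0.25, -0.5, 0.25, 0.0, 0.75, 1.5, 1.25, 2.0]
    print(first_signal(path, 1.5))  # 5
```

A path that drifts steadily upward means deaths are accumulating faster than the risk model expects, which is the kind of pattern that would prompt a review.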

Shewhart U-charts of standardised mortality ratios (SMRs) were used to detect department-level anomalies (significant deviations from expected values, set by a panel of clinical experts) in weekly mortality rates.
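A textbook u-chart for SMRs can be sketched as follows, treating each week's expected deaths as the "area of opportunity". This assumes conventional three-sigma limits around the pooled SMR; the hospital's actual thresholds are not described beyond the involvement of an expert panel, so the limits, function name, and data here are illustrative only. The sketch is in Python, not the R used by the hospital.

```python
import math


def smr_u_chart(observed, expected, sigmas=3.0):
    """Per-period SMR with u-chart control limits.

    observed: observed deaths per period.
    expected: expected deaths per period (sum of predicted risks), all > 0.
    Returns the centre line and one dict per period.
    """
    center = sum(observed) / sum(expected)  # pooled SMR across all periods
    rows = []
    for obs, exp in zip(observed, expected):
        half_width = sigmas * math.sqrt(center / exp)  # wider when few deaths are expected
        lower = max(0.0, center - half_width)          # an SMR cannot be negative
        upper = center + half_width
        smr = obs / exp
        rows.append({
            "smr": smr,
            "lower": lower,
            "upper": upper,
            "out_of_control": smr < lower or smr > upper,
        })
    return center, rows


if __name__ == "__main__":
    observed = [4, 5, 3, 14, 4]            # toy weekly death counts for one department
    expected = [4.0, 5.0, 4.0, 4.0, 4.0]   # toy expected deaths from a risk model
    center, rows = smr_u_chart(observed, expected)
    print([row["out_of_control"] for row in rows])  # [False, False, False, True, False]
```

Because the limits scale with the expected count, a small department with few expected deaths gets wider limits than a large one, which reduces false alarms from small numbers.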

Validation results: accuracy, reliability, and efficiency gains

Validation of the model on over 1,000 admissions in early 2025 revealed strong accuracy and reliability.

The model was able to accurately distinguish between patients who died and those who survived, and its predicted risk scores closely matched observed mortality rates across risk groups. Its accuracy remained stable on new data, showing it was not just tailored to patterns in the training set.
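These two properties are usually called discrimination and calibration. The article does not say which metrics the hospital reported, so the sketch below assumes two common choices: the c-statistic (equivalent to the area under the ROC curve) for discrimination, and a comparison of mean predicted risk with observed death rate within risk groups for calibration. It is illustrative Python with toy data, and the function names are hypothetical.

```python
def c_statistic(outcomes, risks):
    """Share of (death, survivor) pairs in which the death had the higher predicted risk.

    Ties count as half. 0.5 means no discrimination; 1.0 means perfect separation.
    """
    died = [r for y, r in zip(outcomes, risks) if y == 1]
    survived = [r for y, r in zip(outcomes, risks) if y == 0]
    if not died or not survived:
        raise ValueError("need at least one death and one survivor")
    score = 0.0
    for d in died:
        for s in survived:
            if d > s:
                score += 1.0
            elif d == s:
                score += 0.5
    return score / (len(died) * len(survived))


def calibration_by_group(outcomes, risks, n_groups=2):
    """Sort by predicted risk, split into groups, and return (mean predicted, observed rate) per group."""
    pairs = sorted(zip(risks, outcomes))
    n = len(pairs)
    table = []
    for g in range(n_groups):
        chunk = pairs[g * n // n_groups:(g + 1) * n // n_groups]
        if not chunk:
            continue
        mean_predicted = sum(r for r, _ in chunk) / len(chunk)
        observed_rate = sum(y for _, y in chunk) / len(chunk)
        table.append((mean_predicted, observed_rate))
    return table


if __name__ == "__main__":
    outcomes = [0, 0, 0, 1, 0, 1, 1, 1]
    risks = [0.05, 0.10, 0.20, 0.30, 0.40, 0.60, 0.80, 0.90]
    print(c_statistic(outcomes, risks))  # 0.9375
    for mean_predicted, observed_rate in calibration_by_group(outcomes, risks):
        print(f"predicted {mean_predicted:.3f} vs observed {observed_rate:.3f}")
```

A well-calibrated model shows predicted and observed values that track each other from the lowest-risk group to the highest; with real data, ten groups rather than two would be more typical.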

The CUSUM charts helped highlight a significant cluster of excess deaths between February 23 and March 16, which prompted a review. Separately, the Shewhart U-charts revealed elevated mortality rates in Paediatrics and Surgery, guiding further audits that uncovered areas for improvement.

Overall, the system helped identify periods of special-cause variation and guide more focused chart reviews. During the pilot, it reduced clinicians’ review workload by nearly half without missing any clinician-identified unexpected deaths.

“This project demonstrates how a locally developed, AI-enabled mortality monitoring system can strengthen clinical governance within a secondary-level hospital. It replaces crude mortality counts with risk-adjusted indicators that better account for patient complexity and variation in care delivery. This has supported more targeted audits and a more informed approach to performance monitoring,” said the hospital.

The system is highly reproducible and sustainable, as it was developed in R using open-source packages and relies on routinely collected administrative data. The AI techniques used (NLP and ensemble learning) were combined with classical statistical methods to enhance predictive performance while preserving transparency and clinical interpretability.

The hospital concluded: “The system was developed to be sustainable over the long term, with a modular architecture that supports future expansion into other domains, such as surgical site infections or readmission rates. Its transparent architecture also supports ongoing refinement by in-house staff without reliance on commercial vendors.

“This initiative highlights the value of investing in transparent, interpretable analytics tools that support meaningful oversight of clinical outcomes.”